About the client

Our long-time client from Europe (NDA) develops business support system (BSS) solutions for communications service providers. We had already supported them with one of their core products, a corporate ERP system. Our team helped the client meet the demands of a telecom operator by modernizing legacy solutions, speeding up development, and designing a step-by-step test plan. As a result, the client was ready for market expansion: after its digital transformation, the system could handle higher loads, more complex processes, and large volumes of data.

The challenge: manual testing couldn’t keep up

Over the year and a half we had been working with this client, manual testing eventually stopped being sufficient.

The project initially involved:

- Our team: three engineers, a database specialist, a QA engineer, a manager, and a frontend developer.

- A parallel team: a database engineer, an ETL engineer, a full-stack developer, a frontend developer, a DevOps engineer, and a product manager.

As the functionality grew with each sprint, so did the number of regression scenarios. After nine months, the client requested a second tester, but manual testing still couldn't keep up with the load.

We also had to bring in a junior engineer to help with testing. They started by dedicating 50% of their time to testing and later moved to full-time QA support because of the volume of work. Despite these efforts, manual regression cycles remained a bottleneck: two-week sprints were delayed because QA simply couldn't finish in time for the release.

The most critical finding for the client was that mobile testing wasn’t happening at all. With the available resources, even desktop testing couldn’t be fully completed, let alone mobile testing.

Why automation testing was the best choice

The client’s situation presented several factors that led us to recommend test automation:

- Multiple platforms requiring testing (web and mobile)

- A large user base and high performance demands on the UI

- Mostly manual testing with limited automation

- Severe release delays caused by manual regression testing

Test automation was the right solution primarily from a business perspective:

- The client needed faster testing to reduce the time required to verify product functionality and to accelerate the release of new versions.

- The customer account modules needed BDD automated tests to make scenarios clearer and to validate business logic across complex workflows.

- The client needed higher testing accuracy to improve product quality and obtain more reliable results.

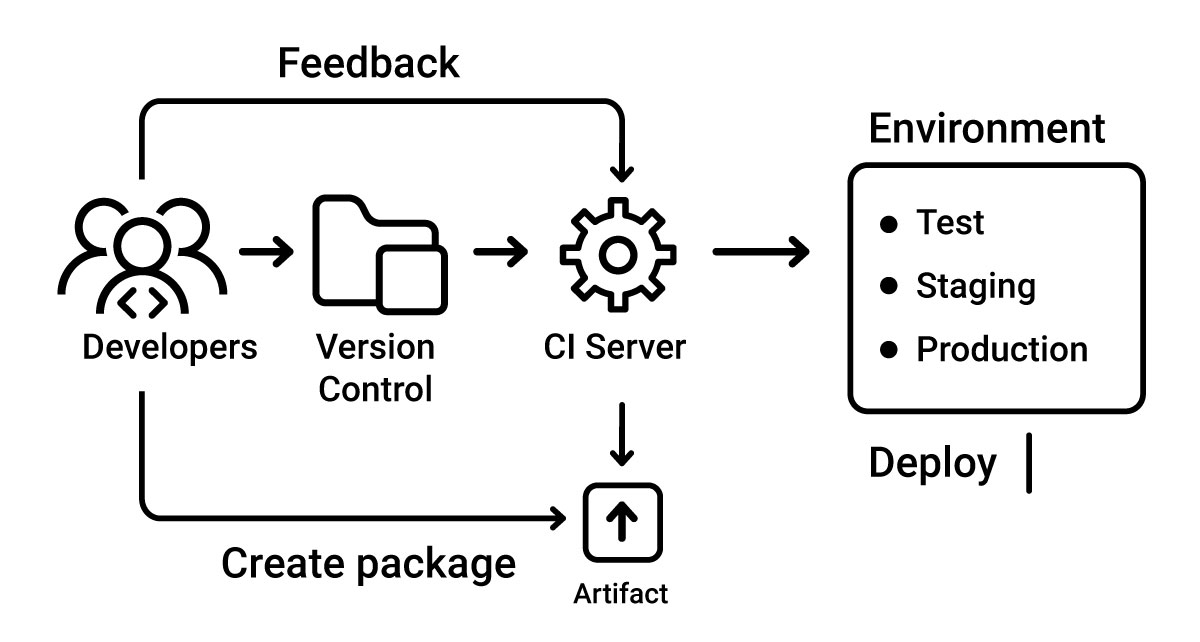

- The client needed to support an asynchronous process. Automated testing within the CI/CD pipeline ensures a stable testing workflow and makes defects easier to detect.

How we approached the case: CI-driven core framework for automation testing

QA and test automation are among our primary areas of expertise. Our team proposed and implemented CI/CD test automation for the client's telecom billing platform, which enabled us to:

- Free the DevOps engineer from QA duties

- Run automated tests on several devices with different characteristics

Optimized coverage included:

- Automated regression testing of the C# enterprise system, with tests launched asynchronously

- Behavior under different network conditions

Cross-device UI testing at scale

We automated load testing across different operating systems on the latest devices from five mobile brands: Apple, Samsung, Xiaomi, Google Pixel, and OnePlus. We varied network speed and tested behavior under different Android versions, RAM and storage limits, and screen resolutions.

Scenarios covered complex UI transitions, page load validation, and interaction with dynamic tables and forms.

Each delivery was accompanied by the automated execution of complex test cases.

Scenario example

A Feature describes a piece of business logic to be tested. It can be as specific or as general as is convenient.

The framework automatically detects all relevant elements for interaction and verification. It also enables the automated collection of all user activity metrics.

All scenarios within a feature can be parameterized for combinatorial testing using Examples.

Examples may be a finite list or a range of input parameters, depending on the scenario.
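Here is a minimal Python sketch that makes the Examples mechanism concrete. It is not the project's code, which uses Reqnroll and C#. The sketch expands a scenario template into concrete cases using a finite list and a numeric range. The template text, the parameter names `tariff_plan` and `discount_percent`, and their values are hypothetical. A standard Gherkin Examples table runs one scenario per row. The sketch generates those rows as a Cartesian product, which is one way to get combinatorial coverage and not necessarily the approach the framework uses.

```python
from itertools import product

# Hypothetical scenario template; <name> placeholders follow the Gherkin convention.
TEMPLATE = (
    "Given a subscriber on the <tariff_plan> tariff plan, "
    "when a <discount_percent>% group discount is applied, "
    "then the monthly charge is recalculated"
)

# Hypothetical Examples: one finite list and one numeric range.
EXAMPLES = {
    "tariff_plan": ["Basic", "Standard", "Premium"],
    "discount_percent": range(0, 30, 10),  # 0, 10, 20
}


def expand_examples(template, examples):
    """Return one concrete scenario per combination (Cartesian product) of values."""
    names = list(examples)
    scenarios = []
    for values in product(*(examples[name] for name in names)):
        text = template
        for name, value in zip(names, values):
            text = text.replace(f"<{name}>", str(value))
        scenarios.append(text)
    return scenarios


if __name__ == "__main__":
    for scenario in expand_examples(TEMPLATE, EXAMPLES):
        print(scenario)
```

With three plans and three discount values, the sketch produces nine scenarios. Adding a value to either list extends coverage without changing the template.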

How the CI-driven core framework behaves

The framework requires minimal code because scenarios are written in business language, using the industry's domain-specific terminology. All BDD test scenarios are designed to stay relevant across future releases.
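Writing scenarios in business language keeps code to a minimum because each plain-language step is bound to a small piece of automation code. The Python sketch below illustrates this binding idea under simple assumptions. Steps are matched by regular expression after the Given/When/Then keyword has been stripped, and the step texts and handler names are hypothetical. In the project, Reqnroll step definitions written in C# fill this role.

```python
import re

# Registry of (compiled pattern, handler) pairs. This is a hypothetical stand-in
# for the attribute-based step definitions a .NET BDD framework provides.
STEP_BINDINGS = []


def step(pattern):
    """Register the decorated function for steps whose text fully matches `pattern`."""
    def decorator(func):
        STEP_BINDINGS.append((re.compile(pattern), func))
        return func
    return decorator


def run_step(text, context):
    """Call the first binding whose pattern matches `text`, passing captured groups."""
    for pattern, handler in STEP_BINDINGS:
        match = pattern.fullmatch(text)
        if match:
            handler(context, *match.groups())
            return
    raise LookupError(f"No binding for step: {text!r}")


# Hypothetical billing-domain bindings (Given/When/Then keywords already stripped).
@step(r'a tariff package named "(.+)"')
def given_package(context, name):
    context["package"] = {"name": name, "price": 0.0}


@step(r"its monthly price is set to (\d+(?:\.\d+)?)")
def set_price(context, price):
    context["package"]["price"] = float(price)


@step(r"the package price should be (\d+(?:\.\d+)?)")
def check_price(context, expected):
    assert context["package"]["price"] == float(expected)


if __name__ == "__main__":
    ctx = {}
    for line in (
        'a tariff package named "Family"',
        "its monthly price is set to 25.50",
        "the package price should be 25.5",
    ):
        run_step(line, ctx)
    print(ctx)  # {'package': {'name': 'Family', 'price': 25.5}}
```

Because the business-facing text lives only in the patterns, testers can reuse a binding in many scenarios without writing new code.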

In addition, the framework doesn’t depend on specific front-end layouts (such as CSS/HTML), which makes tests more stable. It also provides full traceability across the key modules (Billing, Reports, ReportKit, and TeleMarket), so we can track and test core features reliably as the system grows.

The framework also scans each page in the background for a customizable list of “suspicious” keywords, highlights any matches, and includes them in screenshots for reporting.
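The keyword scan can be sketched as follows. The sketch assumes the page text has already been extracted (for example, from the browser) and leaves out screenshot capture. The keyword list and the `[[...]]` highlight markers are hypothetical placeholders for the project's configurable list and visual highlighting.

```python
import re
from dataclasses import dataclass

# Hypothetical default list; in the project the list is customizable.
DEFAULT_SUSPICIOUS = ("error", "exception", "undefined", "NaN", "null")


@dataclass
class Finding:
    keyword: str  # the text exactly as it appears on the page
    start: int    # offset of the first character
    end: int      # offset just past the last character


def find_suspicious(page_text, keywords=DEFAULT_SUSPICIOUS):
    """Return case-insensitive, whole-word keyword hits in order of appearance."""
    pattern = re.compile(
        r"\b(?:" + "|".join(re.escape(k) for k in keywords) + r")\b",
        re.IGNORECASE,
    )
    return [Finding(m.group(0), m.start(), m.end()) for m in pattern.finditer(page_text)]


def highlight(page_text, findings):
    """Wrap every hit in [[...]] markers, a text stand-in for visual highlighting."""
    parts, pos = [], 0
    for f in findings:
        parts.append(page_text[pos:f.start])
        parts.append(f"[[{page_text[f.start:f.end]}]]")
        pos = f.end
    parts.append(page_text[pos:])
    return "".join(parts)


if __name__ == "__main__":
    text = "Total: NaN EUR. Unexpected error while loading invoices."
    hits = find_suspicious(text)
    print([h.keyword for h in hits])  # ['NaN', 'error']
    print(highlight(text, hits))  # Total: [[NaN]] EUR. Unexpected [[error]] while loading invoices.
```

Matching whole words only keeps benign text such as "nullable" or "errors" from being flagged. This is a design choice of the sketch, and a real keyword list may need different rules.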

Success reports went to the NetLS team; the client was notified only when failures occurred in production.

Automation strategy

Integration testing

Integration testing verifies the connections between components and their interaction with other parts of the system.

In the project, all subscribers are divided into groups. For each group of subscribers, we can assign a discount and a tariff plan. Therefore, it is necessary to test the interaction between the components: “Tariff Packages” – “Subscriber Groups” – “Group Discounts.”

It’s also possible to verify the interaction between the components: “Tariff Packages” – “Tariff Plans” – “Standard.”
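As a rough illustration of what such an integration check asserts, the Python sketch below models the components as hypothetical in-memory classes. It verifies that a group's discount is applied to the tariff plan assigned to that group. For brevity, the “Tariff Packages” layer is folded into a single `TariffPlan` class. The fees, the discount, and the group names are invented, and a real test would exercise the billing system itself.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import Optional


@dataclass(frozen=True)
class TariffPlan:
    name: str
    monthly_fee: Decimal


@dataclass(frozen=True)
class GroupDiscount:
    percent: Decimal  # e.g. Decimal("15") means a 15% discount


@dataclass(frozen=True)
class SubscriberGroup:
    name: str
    plan: TariffPlan
    discount: Optional[GroupDiscount] = None


def monthly_charge(group):
    """Per-subscriber charge: the plan's fee minus the group discount, if any."""
    fee = group.plan.monthly_fee
    if group.discount is not None:
        fee -= fee * group.discount.percent / Decimal(100)
    return fee.quantize(Decimal("0.01"))


def test_group_discount_applies_to_tariff_plan():
    """Integration-style check across plan, group, and discount together."""
    standard = TariffPlan("Standard", Decimal("20.00"))
    corporate = SubscriberGroup("Corporate", standard, GroupDiscount(Decimal("15")))
    retail = SubscriberGroup("Retail", standard)  # no discount assigned
    assert monthly_charge(corporate) == Decimal("17.00")
    assert monthly_charge(retail) == Decimal("20.00")


if __name__ == "__main__":
    test_group_discount_applies_to_tariff_plan()
    print("ok")
```

The sketch uses `Decimal` rather than `float` so that monetary results compare exactly.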

System testing

The main task of system testing is to verify both functional and non-functional requirements of the system as a whole.

For example, on the “Tariff Packages” page, you can check the following modes: “Add,” “Edit,” “Save,” “Delete.”

In addition, by using filters on the “Tariff Packages” page, you can verify how the results in the table change and whether the data can be edited, saved, or deleted.
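The checks described for the “Tariff Packages” page can be outlined against a hypothetical in-memory stand-in for that page. The `TariffPackagesPage` class, its methods, and the row fields are assumptions made for illustration. A real system test would drive the actual UI (for example, through Selenium Grid) rather than a Python object.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class TariffPackagesPage:
    """Hypothetical in-memory stand-in for the "Tariff Packages" page under test."""
    rows: dict = field(default_factory=dict)  # row id -> {"name": ..., "status": ...}
    next_id: int = 1
    draft: Optional[dict] = None              # unsaved form contents
    draft_id: Optional[int] = None            # None while adding a new row

    def add(self, name, status="Active"):
        self.draft, self.draft_id = {"name": name, "status": status}, None

    def edit(self, row_id, **changes):
        self.draft, self.draft_id = {**self.rows[row_id], **changes}, row_id

    def save(self):
        if self.draft is None:
            raise RuntimeError("Nothing to save")
        row_id = self.draft_id
        if row_id is None:
            row_id, self.next_id = self.next_id, self.next_id + 1
        self.rows[row_id] = self.draft
        self.draft, self.draft_id = None, None
        return row_id

    def delete(self, row_id):
        del self.rows[row_id]

    def filter(self, **criteria):
        """Rows whose fields equal every given criterion, like a table filter."""
        return {i: r for i, r in self.rows.items()
                if all(r.get(k) == v for k, v in criteria.items())}


def test_tariff_packages_modes():
    page = TariffPackagesPage()
    page.add("Family")
    family = page.save()
    page.add("Business", status="Archived")
    business = page.save()

    # The filter narrows the table to matching rows only.
    assert list(page.filter(status="Active")) == [family]

    # An edit is not applied until Save.
    page.edit(business, status="Active")
    assert list(page.filter(status="Active")) == [family]
    page.save()
    assert set(page.filter(status="Active")) == {family, business}

    # Delete removes the row from filtered and unfiltered views.
    page.delete(business)
    assert business not in page.filter()
    assert list(page.filter(status="Active")) == [family]


if __name__ == "__main__":
    test_tariff_packages_modes()
    print("ok")
```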

Acceptance testing

Acceptance testing is a formal process that checks whether the system complies with the requirements. It is conducted to:

- Determine whether the system meets the requirements and business needs.

- Enable the client or another authorized person to decide whether to deploy the latest version of the project to the production server.

Applied technologies and tools

As a part of our technology consulting services, we supported our client’s project with a reliable tech stack that provides a competitive advantage. We used:

- C# – for backend automation logic.

- BDD frameworks – to enable business-readable test scenarios.

- Selenium Grid – for parallel, cross-platform UI testing.

- Azure DevOps pipelines – to automate test execution and delivery.

- Bootstrap – for responsive, mobile-first UI design and consistent styling.

- SharePoint – for centralized collaboration, document management, reporting on solution versions, and workflow integration.

- jQuery – to simplify DOM manipulation and enhance client-side interactivity.

- Reqnroll – an open-source, Cucumber-style BDD test automation framework for .NET, created as a reboot of the SpecFlow project.

- SQL Server Profiler – to monitor database events and optimize query performance.

- Angular – for dynamic, component-based front-end development.

- Debugger – to trace and fix application logic in real time during development and testing.

- TeamCity – for continuous integration and build management for the pre-production environment.

- Gherkin syntax – for consistent, domain-specific test definitions.

- Azure Monitor – for monitoring, collecting metrics, and logging in a cloud environment.

Planning

The first phase lasted 3 months:

- Automation QA/QC Engineer – 320 hours

- .NET Engineer – 200 hours

- DevOps Engineer – 80 hours

- Project Manager – 160 hours

Based on the technical specification, our QA engineer outlined the key user flows to be covered by automated tests. The automation QA engineer also helped reduce costs: instead of each release being tested manually, tests are now triggered automatically via the CI/CD pipeline right after each commit. Once written, automated tests save dozens of hours of manual work in every sprint.

The active participation of the project manager was critical. The manager coordinated scenario reviews with client-side stakeholders to ensure a shared understanding of what would be tested, agreement on the input data and expected outputs, and a unified vision of how core features should function from both business and technical perspectives.

During planning, we aligned on the BDD grammar: together with the client, we defined and approved a standard format for describing scenarios. As a result, we started with all statuses accepted.

The second phase focused primarily on support. The small number of support requests (only three micro-updates in a year and a half) reflects the effectiveness of our implementation.

Results and business impact

Within four months, the client received fully written automation scripts that eliminated the need to handle repetitive, data-intensive, time- and resource-consuming testing manually.

Ultimately, by transitioning from manual to automated testing, the client achieved:

- Timely delivery cycles. All new versions of the solution are delivered on time according to the approved roadmap.

- QA automation as a continuous process integrated into the CI/CD pipeline. As soon as new code is committed, it triggers an automated workflow: tests are queued and executed asynchronously.

- Minimized human error in QA. Automation detected more issues earlier and with greater precision, making the process more cost-effective and the product more reliable.

- Expanded mobile testing coverage. Testing across multiple popular devices ensured smooth execution of the automated tests, accurate handling of load performance, and consistent behavior across various screen resolutions, internet speeds, and RAM configurations.

- Improved release stability and product quality. Fewer bugs in production meant less downtime and better trust in the product.
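The commit-triggered flow, in which tests are queued and executed asynchronously, can be sketched with Python's `asyncio`. A queue holds the tests for one commit, and a few workers drain it concurrently. This is a minimal sketch, not the project's Azure DevOps or TeamCity configuration. `run_test` and the test names are hypothetical, and a crashing test is simply recorded as a failure.

```python
import asyncio


async def run_test(name):
    """Hypothetical test runner; a real one would drive Selenium Grid or call the API."""
    await asyncio.sleep(0.01)              # simulate test duration
    if name.endswith("_crash"):
        raise RuntimeError("environment failure")
    return not name.endswith("_broken")    # pretend "_broken" tests fail


async def worker(queue, results):
    """Take tests off the queue until cancelled, recording pass/fail for each."""
    while True:
        name = await queue.get()
        try:
            results[name] = await run_test(name)
        except Exception:
            results[name] = False          # a crashing test counts as a failure
        finally:
            queue.task_done()


async def run_suite_for_commit(tests, workers=3):
    """Queue every test triggered by a commit and execute them concurrently."""
    queue = asyncio.Queue()
    for name in tests:
        queue.put_nowait(name)
    results = {}
    tasks = [asyncio.create_task(worker(queue, results)) for _ in range(workers)]
    await queue.join()                     # returns once every queued test is done
    for task in tasks:
        task.cancel()
    await asyncio.gather(*tasks, return_exceptions=True)
    return results


if __name__ == "__main__":
    suite = ["login", "tariff_packages_crud", "group_discounts_broken", "reports_crash"]
    print(asyncio.run(run_suite_for_commit(suite)))
```

A fixed number of workers caps how many tests run at once. This is roughly how a limited pool of test-execution nodes would be shared between queued tests.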

After cooperating with us, companies in different industries are empowered to:

- Have the development team demonstrate the release candidate at the end of each sprint, with the client able to approve or reject the release.

- Have the client conduct UAT (user acceptance testing) after deployment to the production environment.

- Introduce changes and adjustments after UAT and before the start of the next sprint.

- Implement automation without hiring in-house specialists, with ongoing support and the option to extend test coverage with new scripts as needed.

- Enhance customer loyalty through a well-tested and properly configured product that improves user experience and satisfaction.

- Drive revenue growth due to an expanded client base and higher-quality service delivery.

- Reduce expenses and improve business management processes by replacing manual testing with automation.

- Improve product quality through consistent, automated validation that leaves fewer bugs in production.

- Strengthen their market position thanks to faster delivery cycles, improved functionality, and a more reliable product.

Learn how our automation testing services built on the Reqnroll framework for .NET can elevate your testing process and help you gain a business advantage. Request our pricing to share the details of your needs, and book an intro call to discover how our automation solutions can align with your business goals.

Yuliia Suprunenko